I am happy to share that I received the Complexity Best Presentation Award at the 2nd Days of Applied Nonlinearity and Complexity (DANOC) for my talk “Anomalous diffusion in soccer: Can we characterize a team’s playing style?”, based on joint work with Miguel Á. García March and Ferran Heredia.

DANOC was a three-day online conference aimed at advancing discussion on recent developments in nonlinearity and complexity. The event brought together theory and applications in creative ways, exploring the boundaries between different nonlinear scientific fields. It was designed as a platform for vigorous scientific exchange and was organized into four sessions, with two sessions per day.

I’m grateful to the organizers and participants for the stimulating discussions and for recognizing our work.

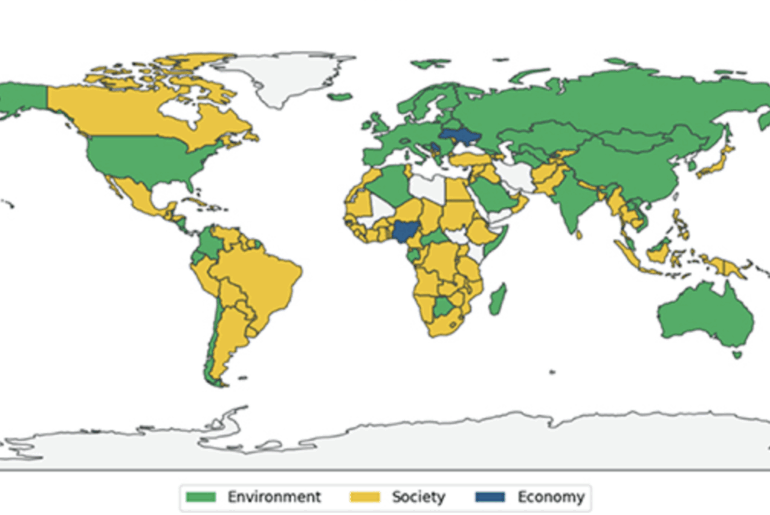

Accurate, reliable and up-to-date information supports effective decision-making by reducing uncertainty and enabling informed choices. Multiple crises threaten the sustainability of our societies and put planetary boundaries at risk, hence requiring usable and operational knowledge. Natural-language processing tools facilitate data collection, extraction and analysis. They expand knowledge utilization capabilities by improving access to reliable sources in less time. They also identify patterns of similarities and contrasts across diverse contexts. We apply general and domain-specific large language models (LLMs) to two case studies and document appropriate uses and shortcomings of these tools for two tasks: classification and sentiment analysis of climate and sustainability documents. We study both statistical and prompt-based methods. In the first case study, we use LLMs to assess whether climate pledges trigger cascade effects in other sustainability dimensions. In the second case study, we use LLMs to identify interactions between the sustainable development goals and detect the direction of their links to frame meaningful policy implications. We find that LLMs are successful at processing, classifying and summarizing heterogeneous text-based data, which helps practitioners and researchers access it. LLMs detect strong concerns from emerging economies about food security, water security and urban challenges as primary issues. Developed economies, instead, focus their pledges on the energy transition and climate finance. We also detect and document four main limits along the knowledge production chain: interpretability, external validity, replicability and usability. These risks threaten the usability of findings and can lead to failures in the decision-making process. We recommend risk mitigation strategies to improve transparency and literacy on artificial intelligence (AI) methods applied to complex policy problems.
Our work presents a critical but empirically grounded application of LLMs to climate and sustainability questions and suggests avenues to further expand controlled and risk-aware AI-powered computational social sciences.
